AI Model

Tiny Language Model

TLM is a lightweight, scalable AI model designed for efficiency, affordability, and customization. With approximately 10 million parameters per instance, it is faster to train and easier to fine-tune than large models, making it well suited to industry-specific applications such as healthcare, education, and manufacturing.
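To make the "approximately 10 million parameters" figure concrete, the sketch below counts the parameters of a small Transformer stack. The source does not publish TLM's configuration, so the function `transformer_params`, the decoder-only layout, and every hyperparameter (vocabulary 8,000, width 256, 10 layers, feed-forward width 1,024, context 512) are illustrative assumptions, chosen only to show one way a model lands near 10 million parameters.

```python
# Hypothetical configuration: the source states only "approximately 10 million
# parameters per instance"; every hyperparameter used here is an assumption.

def transformer_params(vocab_size: int, d_model: int, n_layers: int,
                       d_ff: int, max_len: int) -> int:
    """Count trainable parameters of a simple decoder-only Transformer.

    Assumes tied input/output embeddings, learned positional embeddings,
    biases on every linear layer, and two LayerNorms per block.
    """
    embeddings = vocab_size * d_model + max_len * d_model
    attention = 4 * (d_model * d_model + d_model)            # Q, K, V and output projections
    feed_forward = d_model * d_ff + d_ff + d_ff * d_model + d_model
    layer_norms = 2 * (2 * d_model)                          # gain + bias for two norms
    per_layer = attention + feed_forward + layer_norms
    final_norm = 2 * d_model
    return embeddings + n_layers * per_layer + final_norm


total = transformer_params(vocab_size=8000, d_model=256, n_layers=10,
                           d_ff=1024, max_len=512)
print(f"{total:,}")  # 10,077,184
```

In this configuration most of the budget sits in the layers (about 7.9 million) rather than the embeddings (about 2.2 million), so the layer count and width are the main levers for moving the total.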

Unlike traditional large models, TLM runs on modest hardware such as general-purpose PCs or laptops, making it accessible and affordable.

Powered by SETH Architecture

The SETH neural network underpins TLM. It combines features of Spiking Neural Networks (SNN) and Transformers, and this groundbreaking hybrid improves processing efficiency while reducing energy consumption.
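The source does not describe which Spiking Neural Network features SETH borrows. As an assumption for illustration only, this sketch shows the leaky integrate-and-fire (LIF) neuron, a standard SNN building block: it accumulates input over time steps and emits a binary spike only when a threshold is crossed. That event sparsity is the usual basis for SNN energy-efficiency claims. The function `lif_spikes` and its `threshold` and `decay` parameters are hypothetical and are not part of SETH.

```python
def lif_spikes(inputs, threshold=1.0, decay=0.9):
    """Leaky integrate-and-fire neuron (illustrative; not SETH's actual code).

    At each time step the membrane potential leaks by `decay`, integrates the
    new input, and fires a spike (1) when it reaches `threshold`, after which
    it resets to zero. Otherwise the step outputs 0.
    """
    potential = 0.0
    spikes = []
    for x in inputs:
        potential = decay * potential + x  # leak, then integrate the new input
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0                # hard reset after a spike
        else:
            spikes.append(0)
    return spikes


print(lif_spikes([0.5, 0.5, 0.5, 0.0, 1.2]))  # [0, 0, 1, 0, 1]
```

Downstream layers only need to do work on the steps that carry a spike, which is where a spiking design can save compute compared with processing every dense activation.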

Internally Distributed Structure

TLM’s unique design enables seamless scalability by connecting models across the TLM, Small Language Model (SLM), and Large Language Model (LLM) ecosystems.

Models communicate and collaborate, boosting accuracy and versatility without massive resource demands.

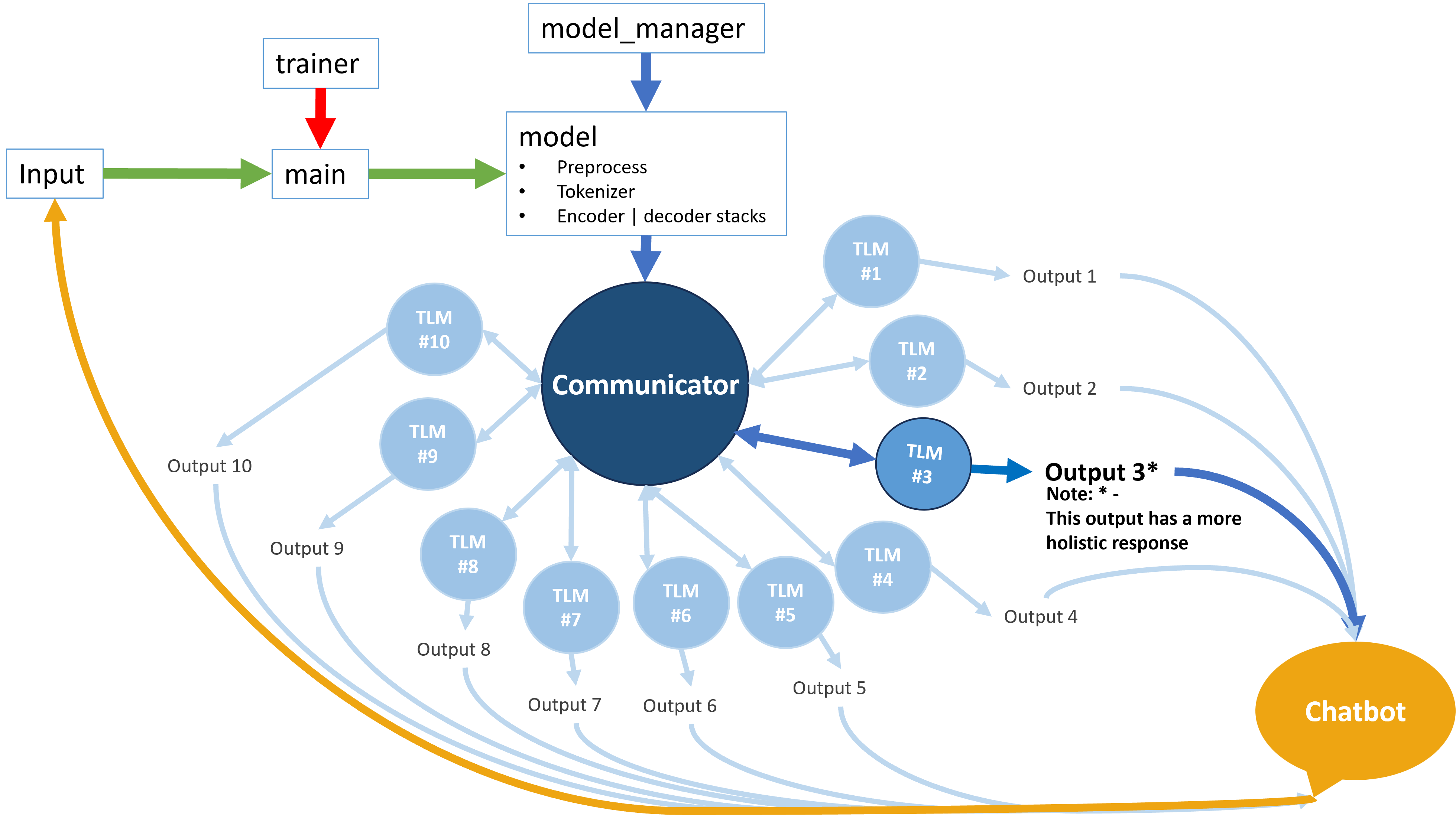

TLM Flowchart

Chatbot – where users enter their prompts

Prompts enter the TLM system through its ‘main’ file, which acts as the coordinator and monitoring mechanism.

‘model’ is the heart of TLM. It comprises preprocessing, a tokenizer, and encoder/decoder stacks.
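The preprocessing and tokenizer stages of ‘model’ can be sketched as follows. The source does not say which tokenizer TLM uses; `preprocess` and `WordTokenizer` are hypothetical, a minimal word-level scheme built on Python's standard `re` module, whereas Transformer models typically use subword tokenizers such as BPE. The integer ids it produces are the kind of input an encoder/decoder stack consumes.

```python
import re


def preprocess(text: str) -> str:
    """Lowercase and collapse runs of whitespace (an assumed, minimal step)."""
    return re.sub(r"\s+", " ", text.lower()).strip()


class WordTokenizer:
    """Toy word-level tokenizer; id 0 is reserved for unknown words."""

    def __init__(self, corpus):
        words = sorted({w for line in corpus for w in preprocess(line).split()})
        self.vocab = {"<unk>": 0}
        for w in words:
            self.vocab[w] = len(self.vocab)  # assign ids 1, 2, 3, ... in sorted order
        self.inverse = {i: w for w, i in self.vocab.items()}

    def encode(self, text):
        """Map each word to its id, falling back to 0 for unseen words."""
        return [self.vocab.get(w, 0) for w in preprocess(text).split()]

    def decode(self, ids):
        """Map ids back to words, joined by single spaces."""
        return " ".join(self.inverse.get(i, "<unk>") for i in ids)


tok = WordTokenizer(["The patient has a fever", "the nurse checks the patient"])
ids = tok.encode("The  NURSE has a cough")
print(ids)              # [7, 5, 4, 1, 0]
print(tok.decode(ids))  # the nurse has a <unk>
```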

‘model manager’ uses ‘model’ as its basis to create multiple ‘instances’ of TLM, each with roughly 10 million parameters.
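A minimal sketch of how a model manager might create ‘instances’ as clones of the base ‘model’, assuming that cloning means an independent deep copy. `TinyModel`, `ModelManager`, and `create_instance` are hypothetical names, and the plain-list weights stand in for real tensors.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class TinyModel:
    """Stand-in for the base 'model'; real weights would be tensors."""
    weights: dict = field(default_factory=lambda: {"w": [0.1, 0.2, 0.3], "b": [0.0]})
    name: str = "base"


class ModelManager:
    """Hypothetical manager that clones a base model into named instances."""

    def __init__(self, base: TinyModel):
        self.base = base
        self.instances = {}

    def create_instance(self, name: str) -> TinyModel:
        clone = copy.deepcopy(self.base)  # independent copy of all weight lists
        clone.name = name
        self.instances[name] = clone
        return clone


manager = ModelManager(TinyModel())
med = manager.create_instance("healthcare")
med.weights["w"][0] = 0.9             # simulate fine-tuning one clone
print(manager.base.weights["w"][0])   # 0.1
print(sorted(manager.instances))      # ['healthcare']
```

Using `copy.deepcopy` rather than a shallow copy matters here: each clone gets its own weight lists, so fine-tuning one instance for a domain does not silently alter the base model or the other instances.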

The TLM instances it creates are ‘clones’ of the base ‘model’, and they interact with one another via the ‘communicator’ module.

The ‘communicator’ module routes each type of prompt to the relevant TLM instance.
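The source does not state how the communicator decides which instance should handle a prompt. As a hypothetical illustration, this sketch routes by keyword overlap; the `ROUTES` table, the instance names, the `"general"` fallback, and the `route` function are all assumptions, and a real system might use a learned classifier instead.

```python
import re

# Hypothetical mapping from instance name to trigger keywords.
ROUTES = {
    "healthcare": {"patient", "symptom", "diagnosis", "dose"},
    "education": {"lesson", "student", "exam", "homework"},
    "manufacturing": {"machine", "assembly", "defect", "inventory"},
}


def route(prompt: str, routes=ROUTES, default="general") -> str:
    """Return the instance whose keywords overlap the prompt most.

    Falls back to `default` when no keyword matches; ties go to the
    instance listed first in `routes`.
    """
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    scores = {name: len(words & keywords) for name, keywords in routes.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default


print(route("What dose should this patient take?"))  # healthcare
print(route("Schedule the machine assembly check"))  # manufacturing
print(route("Tell me a joke"))                       # general
```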

Unlike Mixture of Experts (MoE), which only routes inputs one way to selected experts, TLM’s communicator compiles weights and biases from all TLM instances and re-shares them with all other instances.
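The source says the communicator compiles weights and biases from all instances and re-shares them, but not how they are combined. This sketch assumes element-wise averaging, similar in spirit to federated averaging, plus a hypothetical `mix` parameter: `mix=1.0` replaces each instance's parameters with the average, while smaller values blend the average into each instance's own parameters so that some specialization survives. `compile_and_share` and the dict-of-lists parameter format are illustrative assumptions.

```python
def compile_and_share(instances, mix=1.0):
    """Average each named parameter across instances, then write it back.

    `instances` is a list of dicts mapping parameter names to equal-length
    lists of floats; all instances must share the same layout (as clones do).
    Each instance ends up with (1 - mix) * own + mix * average, element-wise.
    """
    count = len(instances)
    # Compile: element-wise mean of every parameter across all instances.
    averaged = {
        name: [sum(vals) / count for vals in zip(*(inst[name] for inst in instances))]
        for name in instances[0]
    }
    # Re-share: blend the average back into every instance in place.
    for inst in instances:
        for name, avg in averaged.items():
            inst[name] = [(1 - mix) * own + mix * a for own, a in zip(inst[name], avg)]
    return averaged


a = {"w": [1.0, 2.0], "b": [0.0]}
b = {"w": [3.0, 4.0], "b": [1.0]}
print(compile_and_share([a, b]))  # {'w': [2.0, 3.0], 'b': [0.5]}
print(a == b)                     # True
```

Averaging parameter by parameter only makes sense because the instances are clones with identical shapes; with `mix=1.0` every instance becomes identical, which is why a partial blend may be preferable when instances are meant to stay specialized.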

SLM – Small Language Model

LLM – Large Language Model

Comparison Chart