SETH

STDP Enhanced Transformer Hybrid

SETH is an AI architecture and the cornerstone of Wildnerve’s AI innovation: a hybrid that combines selected features of Spiking Neural Networks (SNNs) and Transformers to deliver a highly efficient, scalable, and adaptable foundation for AI development. The ‘STDP’ in its name stands for spike-timing-dependent plasticity, a learning rule from the spiking-neural-network literature. Designed from the ground up, SETH is optimized to require minimal GPU resources while maintaining exceptional performance, so that it can be deployed on affordable, accessible hardware.
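This document does not describe how SETH applies STDP, so the following is only a minimal sketch of the textbook pair-based STDP rule, not Wildnerve’s implementation. A synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened when the order is reversed, with the effect decaying exponentially as the gap grows. The amplitudes (`a_plus`, `a_minus`), time constants (`tau_plus`, `tau_minus`) and weight bounds are illustrative assumptions.

```python
import math


def stdp_delta_w(t_pre: float, t_post: float,
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in ms).

    Parameter values are illustrative assumptions, not SETH settings.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the time gap
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the time gap
        return -a_minus * math.exp(dt / tau_minus)
    # Simultaneous spikes: no change under this convention
    return 0.0


def apply_stdp(w: float, pre_spikes: list[float], post_spikes: list[float],
               w_min: float = 0.0, w_max: float = 1.0, **params: float) -> float:
    """Sum contributions from every pre/post spike pair, then clip the weight."""
    total = sum(stdp_delta_w(tp, tq, **params)
                for tp in pre_spikes for tq in post_spikes)
    return min(w_max, max(w_min, w + total))
```

Summing over every spike pair is the "all-to-all" pairing convention; pairing each spike only with its nearest partner is a common alternative.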

SETH is engineered to reduce AI hallucination, improve accuracy, and enhance domain-specific customization. It is the foundation for Wildnerve’s suite of AI models, including TLM (Tiny Language Model), SLM (Small Language Model), and LLM (Large Language Model). This architecture powers our vision of creating AI solutions that are cost-effective, energy-efficient, and tailored for specific industries and use cases.

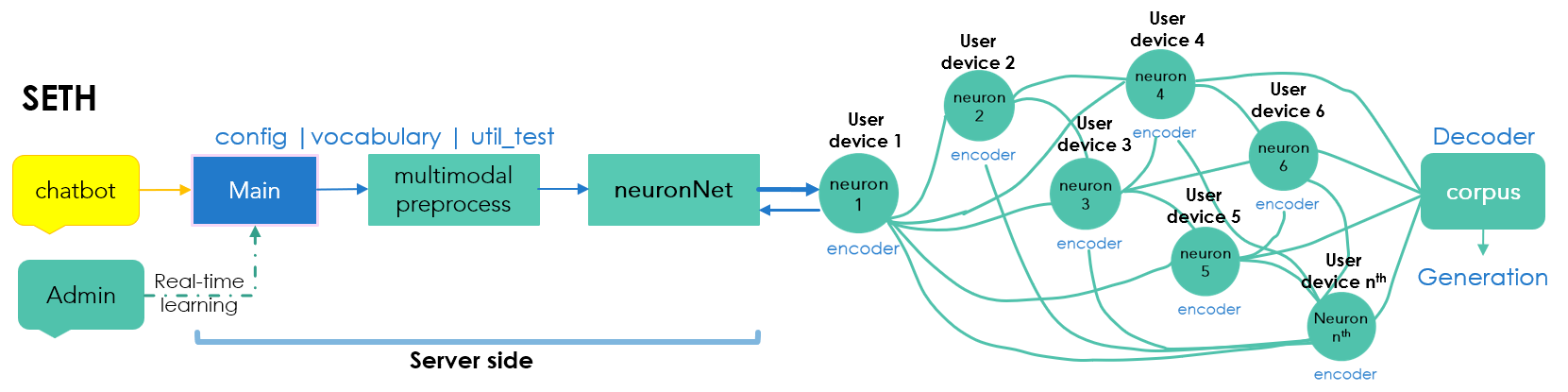

Flowchart

This architecture cascades the processed information to a network of ‘virtual neuron instances’ (VNIs), represented by the circles in the diagram above. VNIs can be grouped, with each group trained on a separate domain, and different groups can then interact with one another to carry out highly complex processing in a way that closely mimics how the human brain works. Our true innovation lies in the distributed nature of the architecture: because each node (VNI) can interact synergistically with the others, the architecture can perform far more contextual inference on less complex, less massive hardware than conventional neural networks require.
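The text does not specify how VNI groups are selected or how they exchange information. As a purely illustrative sketch, the code below models each group as a hypothetical `VNIGroup` with a domain prototype vector and a linear transform, sends an input to the `top_k` groups whose prototypes are most similar to it, and blends their outputs with softmax gates, in the style of a mixture-of-experts layer. The class, the cosine-similarity routing, and the softmax blending are all assumptions, not SETH’s actual mechanism.

```python
import math
from dataclasses import dataclass


@dataclass
class VNIGroup:
    """Hypothetical group of VNIs specialised on one domain (illustrative only)."""
    domain: str
    prototype: list[float]       # assumed domain "signature" used for routing
    weights: list[list[float]]   # assumed linear transform standing in for the group's processing

    def process(self, x: list[float]) -> list[float]:
        # Each output unit is a weighted sum of the inputs
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def route(x: list[float], groups: list[VNIGroup], top_k: int = 2):
    """Send x to the top_k most relevant groups and blend their outputs."""
    # Score every group once, keep the best top_k
    scored = sorted(((cosine(x, g.prototype), g) for g in groups),
                    key=lambda pair: pair[0], reverse=True)[:top_k]
    # Softmax over the kept scores (shifted by the max for numerical stability)
    m = max(s for s, _ in scored)
    exps = [math.exp(s - m) for s, _ in scored]
    gates = [e / sum(exps) for e in exps]
    # Weighted blend of group outputs stands in for inter-group interaction
    outputs = [g.process(x) for _, g in scored]
    combined = [sum(gate * out[i] for gate, out in zip(gates, outputs))
                for i in range(len(outputs[0]))]
    return combined, [(g.domain, gate) for (_, g), gate in zip(scored, gates)]
```

For example, with hypothetical `legal`, `medical` and `finance` groups, an input close to the `legal` prototype is routed mostly to that group, with a smaller share going to the next-closest group and none to the least similar one.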